Engineers create a metamaterial that senses spoken words without the need for a battery. So far it can classify which of two words in a pair was spoken.

By Setare Hajarolasvadi

The electronics we depend on every day, such as phones or laptops, are all examples of digital computers—devices that process data in discrete form. Before the advent of digital electronics, however, mechanical systems were used to process information through measuring continuous variations in physical phenomenon, a process called analog computing. Examples of analog devices range from the Antikythera mechanism, used by the ancient Greeks to predict astronomical positions and eclipses, to the differential analyzer, constructed first by Harold Locke Hazen and Vannevar Bush. Unlike their power-hungry digital descendants, these machines were low on power consumption. However, they were physically large and had limited precision; this ultimately led to their demise.

Now, advances in the design of phononic metamaterials combined with the urge for ultra-low-power artificial intelligence (AI) has brought back analog computing devices; this time, miniaturized and accurate. A team of collaborating researchers from ETH, EPFL and AMOLF has designed a phononic metamaterial that can perform a binary classification for different pairs of spoken words.

What are phononic metamaterials?

Phononic metamaterials are engineered structures that can manipulate acoustic waves, such as speech signals, in eccentric ways that cannot be achieved using natural materials. Their construct usually consists of repeating geometric patterns and resonant elements. Resonators in a mechanical system are components that vibrate at large amplitudes when excited with certain frequencies; similar to how a guitar string vibrates when plucked. Resonances are characterized by a term called quality factor. The longer it takes for a resonance to die away (for the guitar string to stop vibrating), the higher the quality factor.

Dr. Marc Serra-Garcia, currently tenure-track group leader at AMOLF, and one of the lead researchers on the team, says that the idea for the sensor was inspired through leveraging the high quality factor of phononic resonators: “Phononic resonators have quality factors that are many orders of magnitude larger than their electronic counterparts. These resonators can vibrate for a very long time before losing a significant amount of energy. This makes them perfect candidates for low-energy signal processing applications. When we came to this realization, we didn’t have a clear idea about how we can best exploit this property, but we were certain it had a lot of potential.” And it certainly did!

RELATED: Touchscreen Tech Can Sense Tainted Water

How does the device work?

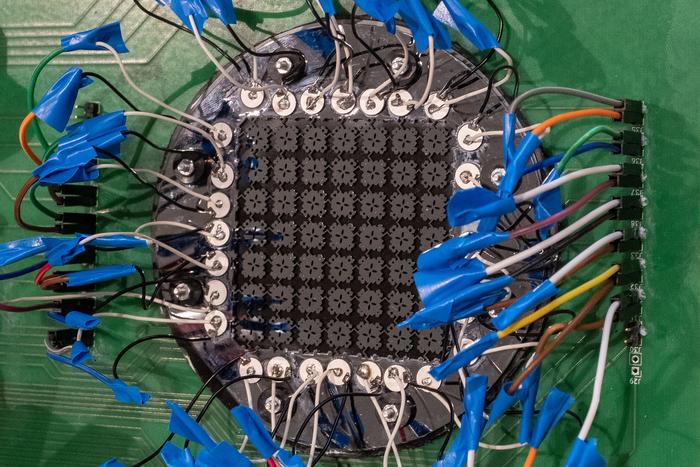

The miniature battery-less sensor consists of repeating non-periodic patterns of perforated plates—acting as phononic resonators—and coupling beams that allow for energy transfer from one plate to another. The task of the sensor is to distinguish between pairs of spoken words, such as “one” and “two.” This is often referred to as binary classification. The goal is achieved by monitoring how much energy is transferred to a specific location on the metamaterial device when it’s excited by utterances of the two words.

The unique geometric design of the device allows for the transmission of energy when excited with one word but not the other. To find this unique geometry, researchers first represented the geometry using a set of parameters, such as perforation size and beam locations. Then, they simulated how the device’s response changes when excited with utterances of spoken digits from the Google Speech Commands Dataset. The dataset contains over fifty thousand utterances of thirty short words by thousands of people. The optimization algorithm identifies the geometry that maximizes the accuracy of the classification task. The final model attained an exceptional classification accuracy above 90 percent.

The researchers fabricated a sample on a silicon wafer that would perform classification between the paired words “three” and “four.” “The device is, in a sense, like the rock in the story of Ali Baba and the Forty Thieves,” Serra-Garcia says jokingly. “It’s just a piece of silicon with no electronics whatsoever. Yet, similar to how that rock would move to open a cave in response to Open Sesame, this device performs a classification task in response to utterances of words.”

RELATED: How Children Learn Language

Future directions

Speech recognition has widespread applications, such as voice dialing and data entry (e.g., reading out the digits of an order number or a credit card). The task is one of the applications of embedded machine learning. Embedded machine learning, also known as TinyML, refers to using embedded systems for gathering real-time data, such as audio signals, that can be analyzed by machine learning algorithms for event detection or prediction tasks.

Embedded systems, e.g., sensors or fitness trackers, are usually smaller, less powerful, and have lower memory. One of the main challenges with the design of such smart systems is minimizing their power consumption. Therefore, the possibility of performing such tasks using zero-power mechanical devices would have high significance. Additionally, there are growing concerns around the environmental impacts of AI. Many IoT devices, including cochlear implants, smartwatches, and wristwear, use batteries. These batteries usually do not last very long. EnABLES researchers recently warned that about 78 million batteries powering IoT devices will be discarded every single day by the year 2025. Therefore, there’s a need to develop green technologies with minimal environmental footprints.

When asked about where he sees the future of the metamaterial research going, Serra-Garcia responded: “This device serves as a proof of concept. We’re currently working on designs that can perform more complicated classification tasks, such as multi-class classification or classification for a group of words, instead of only two. Theoretically, you should be able to have completely passive IoT devices. However, in practice, there are serious challenges that we need to overcome for such technology to be used in real products. Considering everything we’ve discussed, I think there’s a case to be made for analog devices and we’ll continue our research to materialize it.”

This study was published in the peer-reviewed journal Advanced Functional Materials.

RELATED: Learn more about science communication with Get to Know a Scientist: Science Communicator Amanda Coletti

References

CORDIS. (2021, July 23). Up to 78 million batteries will be discarded daily by 2025, researchers warn. https://cordis.europa.eu/article/id/430457-up-to-78-million-batteries-will-be-discarded-daily-by-2025-researchers-warn

Dubček, T., Moreno-Garcia, D., Haag, T., Omidvar, P., Thomsen, H. R., Becker, T. S., Gebraad, L., Bärlocher, C., Andersson, F., Huber, S. D., van Manen, D., Villanueva, L. G., Robertsson, J. O. A., Serra-Garcia, M. (2024). In-sensor passive speech classification with phononic metamaterials. Advanced Functional Materials. https://doi.org/10.1002/adfm.202311877

Featured image: Astrid Robertsson / ETH Zurich

About the Author

Setare Hajarolasvadi is an acoustic research engineer with Sigma Connectivity, working closely with the Reality Labs Research Audio team at Meta. She got her PhD from the University of Illinois at Urbana-Champaign. Currently, her research focuses on mathematical and numerical modeling for verification and validation studies within the AR/VR domain. Her PhD research involved studying the physics of how waves and vibrations propagate in complex structures. Setare is passionate about science communication and making scientific research available to the public. In her free time, she enjoys exploring nature, reading, and playing the piano. Follow her on LinkedIn.